NVM Express (NVMe) is a recent standard designed to speed up access to data on non-volatile memory-based media such as SSDs. Approved in 2011, the standard was quickly adopted and is now widely used by SSD, server and storage system manufacturers.

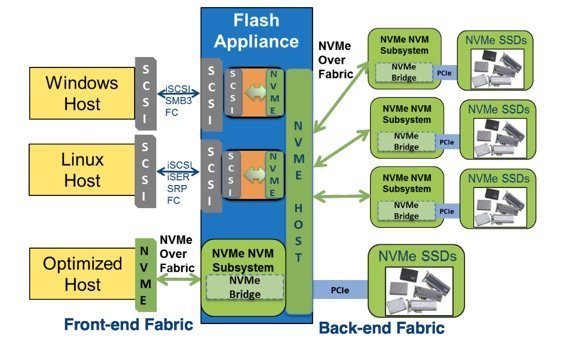

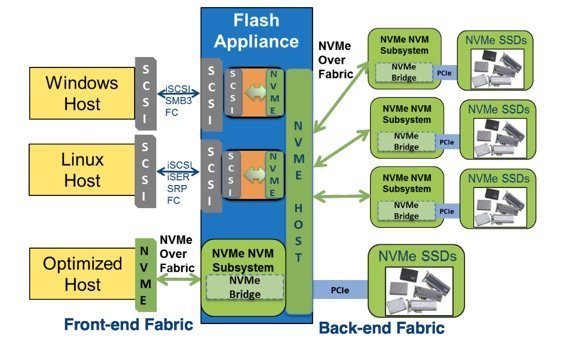

Possible architecture of a future flash array with NVMe over Fabrics disk shelves

NVMe frees the power of Flash storage

In PCs and servers, the NVMe protocol relies on the PCIe bus to access flash storage. By building on the second-fastest bus in modern systems (behind the memory bus), the designers of the standard wanted to reduce data access latency, but also to increase parallelism (up to 64,000 I/O queues in parallel) and performance. The command set used to access data has also been adapted to non-volatile storage media. The old SCSI command set was designed for rotating media such as hard drives and had become an obstacle to exploiting the intrinsic capabilities of flash storage.

NVMe relies on a greatly reduced and simplified command set compared with SCSI: three mandatory commands (Read, Write, Flush) for data access, plus eight optional ones, and 10 mandatory administration commands, plus five optional ones. That makes at most 26 NVMe commands, against almost 200 SCSI commands.
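To make the tally concrete, here is a small sketch that enumerates one command list consistent with those counts. The names and opcodes are recalled from the NVMe 1.1-era base specification and are an assumption on our part, not something taken from this article; check them against the revision you actually target.

```python
# Illustrative enumeration of NVMe commands: name -> (opcode, mandatory).
# Assumption: names and opcodes follow the NVMe 1.1-era base specification;
# later revisions add more optional commands.
NVM_IO_COMMANDS = {
    "Flush": (0x00, True),
    "Write": (0x01, True),
    "Read": (0x02, True),
    "Write Uncorrectable": (0x04, False),
    "Compare": (0x05, False),
    "Write Zeroes": (0x08, False),
    "Dataset Management": (0x09, False),
    "Reservation Register": (0x0D, False),
    "Reservation Report": (0x0E, False),
    "Reservation Acquire": (0x11, False),
    "Reservation Release": (0x15, False),
}

ADMIN_COMMANDS = {
    "Delete I/O Submission Queue": (0x00, True),
    "Create I/O Submission Queue": (0x01, True),
    "Get Log Page": (0x02, True),
    "Delete I/O Completion Queue": (0x04, True),
    "Create I/O Completion Queue": (0x05, True),
    "Identify": (0x06, True),
    "Abort": (0x08, True),
    "Set Features": (0x09, True),
    "Get Features": (0x0A, True),
    "Asynchronous Event Request": (0x0C, True),
    "Firmware Activate": (0x10, False),
    "Firmware Image Download": (0x11, False),
    "Format NVM": (0x80, False),
    "Security Send": (0x81, False),
    "Security Receive": (0x82, False),
}


def count(commands):
    """Return (mandatory, optional) counts for a command table."""
    mandatory = sum(1 for _, is_mandatory in commands.values() if is_mandatory)
    return mandatory, len(commands) - mandatory


io_mandatory, io_optional = count(NVM_IO_COMMANDS)
admin_mandatory, admin_optional = count(ADMIN_COMMANDS)
total = len(NVM_IO_COMMANDS) + len(ADMIN_COMMANDS)
print(io_mandatory, io_optional, admin_mandatory, admin_optional, total)
```

The I/O and administration tables are kept separate because the two opcode spaces overlap: the same numeric opcode means different things on an I/O queue and on the admin queue.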

The adoption of NVMe in modern systems has significantly increased the performance of access to data stored on PCIe flash media, but NVMe retained one limitation. Because it depends on the PCIe bus, until very recently it was impossible to benefit from NVMe's gains outside a PC or server. NVMe over Fabrics (NVMf) aims to remove this limitation by extending the use of the NVMe command set beyond the server.

NVMe over Fabrics: NVMe applied to fast networks

The NVM Express over Fabrics specification defines an architecture for implementing the NVMe command set over fast network fabrics that support RDMA (InfiniBand, 10G, 40G and 100G Ethernet) or over Fibre Channel (FC-NVMe). On Ethernet networks, implementing NVMe over Fabrics requires support for an RDMA technology, either RoCE (RDMA over Converged Ethernet) or iWARP (Internet Wide Area RDMA Protocol).

Work on the NVMe over Fabrics specification began in 2014 and was completed in June 2016 with the release of version 1.0 of the standard. The goal of NVMe over Fabrics is for the network fabric to add no more than 10 microseconds of latency to the processing of an I/O operation, compared with NVMe used locally in a server. This promise is largely kept by the first systems available on the market, such as those from Apeiron, E8 Storage or Mangstor.
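The 10-microsecond target can be read as a simple budget on the difference between remote and local I/O latency. The sketch below shows that check; the two latency figures are hypothetical values chosen for illustration, not measurements from any of the products named above.

```python
# Latency budget stated for NVMe over Fabrics, in microseconds.
FABRIC_LATENCY_BUDGET_US = 10.0


def fabric_overhead_us(local_latency_us, fabric_latency_us):
    """Extra latency added by the fabric for the same I/O operation."""
    return fabric_latency_us - local_latency_us


def within_budget(local_latency_us, fabric_latency_us,
                  budget_us=FABRIC_LATENCY_BUDGET_US):
    """True when the fabric adds no more than the budgeted latency."""
    return fabric_overhead_us(local_latency_us, fabric_latency_us) <= budget_us


# Hypothetical figures: a read served by a local NVMe SSD versus the same
# read served over the fabric.
local_read_us = 90.0
remote_read_us = 98.5

print(fabric_overhead_us(local_read_us, remote_read_us))
print(within_budget(local_read_us, remote_read_us))
```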

Several possible uses

NVMe over Fabrics can be used like Fibre Channel or iSCSI to connect servers to fast flash storage systems equipped with NVMe drives. With this approach it is possible, as today, to build centralized storage systems that are simple to administer, while offering servers performance close to that of local NVMe drives. The one problem is that, in the current state of the technology, it is difficult to combine performance with rich storage services. Most NVMe arrays on the market offer high performance and basic volume management, but no snapshot, replication or cloning services. All these services must be delivered at the server level by a suitable software layer.

Another possible topology is the one envisaged by Kaminario for its next-generation flash arrays. The idea is to build a storage array with distributed controllers, themselves connected over an RDMA fabric to NVMf disk shelves (JBODs). In such an architecture, each controller has high-performance, end-to-end access to all the NVMe SSDs installed in the NVMf JBODs. By aggregating unused drives on the fly, it is therefore possible to create multiple virtual arrays whose capacity and performance match the needs of the applications. The controllers, for their part, continue to expose traditional data access protocols, which makes it possible to retain a rich set of services.

The differences between local NVMe and NVMf

Essentially, the NVMe over Fabrics protocol reuses the commands of the local NVMe specification for PCIe. However, adjustments were needed to handle the differences between the PCIe bus and an extended network fabric. These adjustments are similar in principle to those made to SCSI to allow it to run over LANs (iSCSI). They are summarized in the table below (source: NVM Express, Inc.).

To carry NVMe commands and responses over a network fabric, the original protocol and its messaging mechanisms had to be adapted. The protocol defines a "capsule" mechanism: each capsule carries an NVMe command or the response to a command, optionally accompanied by the associated data. These capsules are independent of the fabric technology used.
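As a rough illustration of the capsule idea, the sketch below wraps a 64-byte submission queue entry (the command) or a 16-byte completion queue entry (the response) together with optional in-capsule data. The class and function names are hypothetical, the field offsets are recalled from the NVMe base specification, and the data pointer is left zeroed, so treat this as a simplified model rather than a wire-accurate implementation.

```python
import struct
from dataclasses import dataclass

SQE_SIZE = 64   # size of an NVMe submission queue entry (a command)
CQE_SIZE = 16   # size of an NVMe completion queue entry (a response)
OPC_READ = 0x02


def build_read_sqe(cid, nsid, slba, nblocks):
    """Build a simplified Read command (hypothetical helper).

    Flags and the data pointer are left zeroed for brevity; a real fabrics
    command would describe its buffers there.
    """
    sqe = bytearray(SQE_SIZE)
    # Dword 0: opcode, flags, command identifier. Dword 1: namespace ID.
    struct.pack_into("<BBHI", sqe, 0, OPC_READ, 0, cid, nsid)
    # Dwords 10-11: starting LBA. Dword 12, low 16 bits: block count minus 1.
    struct.pack_into("<QH", sqe, 40, slba, nblocks - 1)
    return bytes(sqe)


@dataclass(frozen=True)
class CommandCapsule:
    sqe: bytes
    data: bytes = b""   # optional in-capsule data (for example, write payload)

    def __post_init__(self):
        if len(self.sqe) != SQE_SIZE:
            raise ValueError("a submission queue entry is 64 bytes")

    def to_bytes(self):
        return self.sqe + self.data


@dataclass(frozen=True)
class ResponseCapsule:
    cqe: bytes
    data: bytes = b""

    def __post_init__(self):
        if len(self.cqe) != CQE_SIZE:
            raise ValueError("a completion queue entry is 16 bytes")

    @property
    def command_id(self):
        # Dword 3, low 16 bits: identifier of the command being completed.
        return struct.unpack_from("<H", self.cqe, 12)[0]

    @property
    def status(self):
        # Dword 3, bits 31:17: status field (0 means success).
        return struct.unpack_from("<H", self.cqe, 14)[0] >> 1


command = CommandCapsule(build_read_sqe(cid=7, nsid=1, slba=2048, nblocks=8))

# Fake a successful completion for command 7, as a target might return it.
raw_cqe = bytearray(CQE_SIZE)
struct.pack_into("<HH", raw_cqe, 12, 7, 0)
response = ResponseCapsule(bytes(raw_cqe))
print(len(command.to_bytes()), response.command_id, response.status)
```

Because the capsule only wraps the queue entries, the same bytes can be handed to any transport, which is the sense in which capsules are independent of the fabric.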

To identify the storage devices connected to the fabric, NVMe over Fabrics has adopted the familiar iSCSI addressing convention. Each device's qualified name, the NVMe Qualified Name (NQN), is similar to an iSCSI qualified name (IQN).
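The NVMe specification calls these names NVMe Qualified Names (NQNs). Like an IQN, an NQN starts with a fixed prefix, a year and month, and a reversed domain name, optionally followed by a colon and a free-form string. The sketch below is a deliberately loose plausibility check written for illustration, not a full validator; the function name and the regular expression are our own, and the 223-byte limit is recalled from the specification.

```python
import re

NQN_MAX_BYTES = 223  # maximum NQN length, as recalled from the NVMe spec

# nqn.<yyyy>-<mm>.<reversed domain>[:<free-form string>]
_NQN_RE = re.compile(r"nqn\.(\d{4})-(\d{2})\.([a-z0-9][a-z0-9.-]*)(?::(.+))?")


def is_plausible_nqn(name):
    """Loose structural check; does not enforce every rule of the spec."""
    if len(name.encode("utf-8")) > NQN_MAX_BYTES:
        return False
    match = _NQN_RE.fullmatch(name)
    if match is None:
        return False
    month = int(match.group(2))
    return 1 <= month <= 12


examples = [
    "nqn.2014-08.org.nvmexpress:uuid:f81d4fae-7dec-11d0-a765-00a0c91e6bf6",
    "nqn.2014-08.org.nvmexpress.discovery",
    "iqn.2001-04.com.example:storage",   # an iSCSI name, not an NQN
    "nqn.2016-13.com.example:array1",    # invalid month
]
for name in examples:
    print(name, is_plausible_nqn(name))
```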

NVMe over Fabrics is still a young specification, but the first systems using the standard have appeared on the market, often with proprietary driver components. As usual, the standard drivers in operating systems will need some time to mature before the technology is adopted more widely. This probably means that the first deployments will appear in 2017, with mass adoption really starting in 2018.